The term server colocation refers to renting space and electricity in a third-party data center, which also houses the hardware of other companies, in order to operate one’s own servers there. The word colocation is derived from the Latin “co” (“together”) and “locus” (“place”).

The term server-housing is also very common. The idea came up in the late 1990s when the first companies began to outsource their servers and storage systems to remote data centers. In the beginning, lack of space was the reason for using external data centers, but business advantages very quickly became important as well.

Important supply components such as electricity including emergency power supply, permanent cooling and the network connection are just as much a part of the service of colocation operators as the management and security of the entire infrastructure. Through the joint use of all components and the resulting cost optimization, it is possible to achieve a significant price advantage compared to a self-operated data center.

What are the reasons for colocation?

The main reason for outsourcing a company’s IT infrastructure to an external data center is the saving in running costs. In addition to the high level of physical and digital security, ecological aspects also contribute to this decision, because modern data centers operate much more efficiently than in-house data centers.

The great advantage of server-housing is that the installed servers remain the property of the company. Any hardware and software configuration can be used. Colocation is always the first choice when a company runs highly specialized software on its servers or processes particularly sensitive data.

Likewise, if special hardware is needed to meet particular specifications, there is no way around server-housing. In addition, switching to an external data center is a sensible alternative if the demands on the availability and security of the data increase and would require large investments in the company’s own data center.

If the allocated capacity at the colocation site is not sufficient, additional server racks can simply be added, provided that sufficient rack space is available. The customer always has exclusive and full control over its servers because no other person has physical access to the allocated server racks or servers. With server-housing, long-term contracts are usually concluded to avoid fluctuations in operating costs and to enable reliable budgeting.

Server-housing or managed hosting?

In contrast to server-housing, managed hosting involves renting a dedicated server that is set up and maintained by the provider, and continuously monitored and updated. Due to these circumstances, managed hosting users do not have complete and exclusive control over their servers.

To keep the administrative effort manageable, the configuration of the managed server can be selected from only a few different hardware components and operating systems. Some legacy systems require very specific system configurations that cannot be implemented with conventional standard components. In these cases, managed hosting cannot be used.

The main advantage of managed hosting is that the hosting provider takes over many administration tasks, and the customer can use the resources freed up as a result for other tasks in his company. With digital diversification, it also makes sense to run server-housing and managed cloud services at the same time. Information about Anexia Managed Hosting can be found here.

Characteristics of a colocation data center

A good data center is characterized by several features: high availability of services, secure physical access controls through biometric scanners and video surveillance cameras, redundant uninterruptible power supply systems including emergency generators, and redundant cooling systems and network connections. In addition, the data center should have professional monitoring and comprehensive fire and security alarm systems.

Implement geo-redundancy strategies

By using multiple colocation sites, it is possible to implement geo-redundancy strategies. These usually include multiple sites in different, geographically widely separated regions, so that if one site fails due to an event such as a fire, earthquake or flood, another site can take over operations without interruption.
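The benefit of an additional site can be estimated with a simple probability model. The following is a minimal sketch that assumes the sites fail independently of each other and that failover is instantaneous, which real deployments only approximate. The function name and the example availability of 99.98 percent per site are illustrative assumptions.

```python
def combined_availability(site_availabilities):
    """Availability of a service that is up whenever at least one site is up.

    Assumption: site failures are statistically independent.
    Each availability is a fraction between 0 and 1.
    """
    all_sites_down = 1.0
    for a in site_availabilities:
        if not 0.0 <= a <= 1.0:
            raise ValueError("availability must be between 0 and 1")
        # Probability that this site is down as well as all previous ones
        all_sites_down *= (1.0 - a)
    return 1.0 - all_sites_down


# Two hypothetical sites, each with 99.98 percent availability
print(f"{combined_availability([0.9998, 0.9998]):.8f}")
```

Under these assumptions the probability that both sites are down at the same time is the product of the individual outage probabilities, which is why widely separated regions matter: a shared cause of failure would break the independence assumption.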

The classification of data centers

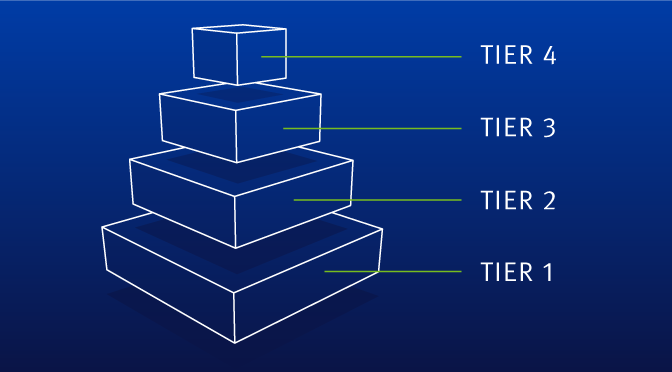

More than 25 years ago, the American Uptime Institute defined different classification levels for public data centers, which since then have set the international standard for the technical classification of data centers.

The gradations are each referred to as a tier, which means rank or layer. Depending on system availability and redundancy, a distinction is made between four classes. This makes it possible to compare the performance and availability of one site infrastructure with another objectively and reliably. The individual levels are designed progressively, so that each higher level builds on the requirements of the level below it. Depending on business requirements, different criteria for maintenance, power supply, cooling, network connectivity and fault tolerance are defined when selecting a data center. The gradations do not mean that a Tier 3 data center is better than a Tier 2 data center, but that the different tiers each fit different business requirements.

The second essential part of the classification is operational sustainability. It refers to the behaviors and risks that affect a data center’s ability to achieve its business goals in the long term. Topology and operational sustainability together form the performance criteria that every data center must meet. Additional factors such as building codes, weather conditions, and real estate use are not considered by the classification because these aspects vary too much from site to site and an objective comparison would not be possible.

Ultimately, it is up to the respective operator to design the expansion and implementation of the data center in a way that best suits its business model. In this way, the operator himself ensures the classification of his data center to the appropriate classification level.

The four tier classifications

The four tier topology levels provide information about the reliability and availability of a data center. The term availability refers to the probability of being able to use a system as planned.

Availability is calculated from the ratio of downtime to total time: it is the share of the total time during which the system is not down. For a system to be considered “highly available”, the availability must be at least 99.99%. This corresponds to an annual downtime of less than one hour.
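The relationship between availability and downtime can be sketched in a few lines. The example assumes a year of 365 days (8,760 hours) and uses illustrative function names.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years (assumption)


def availability(downtime_hours, total_hours=HOURS_PER_YEAR):
    """Fraction of the total time during which the system was usable."""
    return 1.0 - downtime_hours / total_hours


def max_annual_downtime_hours(availability_percent, total_hours=HOURS_PER_YEAR):
    """Longest downtime per year that still meets the given availability."""
    return (1.0 - availability_percent / 100.0) * total_hours


# Downtime budget of a "highly available" system, in minutes per year
print(round(max_annual_downtime_hours(99.99) * 60, 1))
```

For 99.99 percent this gives roughly 52.6 minutes per year, consistent with the figure of less than one hour.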

Importance of the Tier 1 classification

Data centers at this level operate without redundancy. There is only a single path each for power and cooling. Consequently, the system is not fault-tolerant and maintenance during operation is not possible. The required cooling capacity for a Tier 1 site is 220 to 320 watts per square meter. The annual maximum downtime is 28.8 hours. This equates to 99.67 percent availability.

Importance of the Tier 2 classification

The second tier essentially differs from the first tier in that redundant components are used and a cooling capacity of 430 to 540 watts per square meter is required. Tier 2 data centers have an availability of 99.75 percent, which equates to a maximum of 22 hours of downtime per year.

Importance of Tier 3 classification

In a Tier 3 data center, all servers are designed with redundancy and multiple active and passive supply paths are used. These provisions make the system fault-tolerant and allow for maintenance during operation.

Nevertheless, a single point of failure is possible in a Tier 3 data center. The required cooling capacity ranges from 1,070 to 1,620 watts per square meter.

Fail-safety is enhanced by multiple fire compartments. A Tier 3 system offers 99.98 percent availability and a maximum annual downtime of one hour and 36 minutes.

Importance of Tier 4 classification

A Tier 4 system adds further fault tolerance to the Tier 3 topology. Even if a device fails or supply paths are interrupted, operation is not affected. To achieve this goal, all components and supply paths must be designed with redundancy. Single points of failure, which would cause an entire system failure, are thus virtually eliminated. The required cooling capacity is over 1,620 watts per square meter. A Tier 4 data center has an availability of 99.991 percent. This corresponds to a maximum downtime of 0.8 hours per year.
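To match a tier to a business requirement, the availability figures quoted above can be turned into annual downtime budgets and compared with what a company can tolerate. The sketch below is illustrative only: the class and function names are assumptions, and because the percentages in the text are rounded, the derived hours differ slightly from the quoted ones (for example, about 28.9 instead of 28.8 hours for Tier 1).

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years (assumption)


@dataclass(frozen=True)
class Tier:
    name: str
    availability_percent: float  # figure quoted in the text above

    @property
    def max_downtime_hours(self):
        """Annual downtime implied by the availability percentage."""
        return (100.0 - self.availability_percent) / 100.0 * HOURS_PER_YEAR


# Ordered from lowest to highest tier
TIERS = [
    Tier("Tier 1", 99.67),
    Tier("Tier 2", 99.75),
    Tier("Tier 3", 99.98),
    Tier("Tier 4", 99.991),
]


def lowest_tier_within(downtime_budget_hours):
    """Return the lowest tier whose implied downtime fits the budget, or None."""
    for tier in TIERS:
        if tier.max_downtime_hours <= downtime_budget_hours:
            return tier
    return None


for tier in TIERS:
    print(f"{tier.name}: {tier.max_downtime_hours:.2f} hours per year")

# A hypothetical business that tolerates at most 2 hours of downtime per year
match = lowest_tier_within(2.0)
print(match.name if match else "no tier fits")
```

Picking the lowest tier that satisfies the budget reflects the point that a higher tier is not automatically the better choice; it only pays off when the business requirement calls for it.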

Who should consider server colocation?

For companies whose own premises and network connections cannot meet availability requirements, outsourcing servers to a colocation center makes sense. This makes it possible to take advantage of the many benefits of a state-of-the-art IT infrastructure while retaining control over their own servers.

In order to make a decision to outsource the servers to an external data center, the following questions, among others, should be clarified:

How much rack space is needed currently and in the future?

What are the network connectivity requirements?

What is the latency for accessing the server?

What service-level agreements are offered?

What security and compliance requirements must be met?

What physical security measures are in place?
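The first question, current and future rack space, can be approached with a rough projection. The sketch below assumes steady compound growth and full-height racks with 42 rack units; the function name, the growth rate and the starting figure are hypothetical and would need to be replaced with a company’s own numbers.

```python
import math


def racks_needed(current_units, annual_growth, years, units_per_rack=42):
    """Estimate how many racks are needed after a number of years.

    current_units:  rack units occupied today
    annual_growth:  assumed yearly growth as a fraction (0.2 means 20 percent)
    units_per_rack: usable rack units per rack (42 is an assumption)
    """
    projected_units = current_units * (1.0 + annual_growth) ** years
    # Racks are rented whole, so round up
    return math.ceil(projected_units / units_per_rack)


# Hypothetical example: 60 rack units today, 20 percent growth per year
print(racks_needed(60, 0.2, 0))  # racks needed today
print(racks_needed(60, 0.2, 3))  # racks needed in three years
```

An estimate like this helps when negotiating a long-term contract, since additional racks can only be added if the site still has space available.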

Global Colocation Infrastructure

Detailed information on our Anexia World Wide Cloud with over 90 locations worldwide can be found here.