It’s playtime: what’s behind Machine Learning, Amazon Alexa and Smart Home

We have already mentioned it in our mobile trends for 2019: Machine Learning, smart home applications and advanced mobile devices such as smartwatches and voice assistants will strongly shape the world of mobile development in the coming years. But how do these things work in detail? How do they interact with each other? What are the challenges for developers when implementing an app? What is their potential, and what are the risks?

To find the answers to these questions, Anexia has created ANX.labs: an interactive room for research and experimentation that invites Anexians to play with new technologies. Here is a first test report from our developers.

The Idea

The idea was to develop an iPhone app that analyzes faces: How old is the person, what is their gender, and what mood are they in? In addition, the app was to offer a social media integration as well as interactions with Philips Hue smart home lamps and Amazon Alexa.

Challenges and solutions

Face Recognition: Machine Learning & API Services

The first task was to find a way to recognize faces. Since we wanted to create a cross-platform app, we couldn’t build on solutions that exist only on iOS or only on Android. That’s a bit of a shame, because Apple offers Core ML for iOS, which runs fully hardware-accelerated on iPhones. We therefore first tried to integrate Machine Learning (ML) models for face and emotion recognition that could be used on both platforms. Since we didn’t have the training, validation and test datasets needed to create our own ML models for this project, we had to use freely available ones. We tried out TensorFlow models and converted them to Apple’s Core ML format with the appropriate tools, so Core ML could still be used this way on iOS. Android, of course, supports TensorFlow models natively, because the format comes directly from Google.

The first tests produced an astonishing result: on iOS, hardware-accelerated image recognition is incredibly fast. An iPhone X, for example, needed less than 10 milliseconds to analyze a 5-megapixel image! So we had the idea of analyzing not only still images but entire video streams. That was no problem at all for the iPhone. On the contrary, detection was so fast that the values coming from the ML model changed too quickly in the UI, and all we could see were flickering numbers, so we throttled the evaluation artificially. Once we could actually judge the quality of the results, however, we soon had to admit that the freely available ML models were not really suitable for gender and emotion recognition. The recognition rate was about 50% or even lower, which for a two-way question like gender is no better than guessing. In addition, the models were huge: the iOS app was about 500 MB in total. We had to find another solution, and our first idea was to look for API services offering this functionality.
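The article does not show how the evaluation was throttled. At under 10 ms per analysis, the model can deliver up to about 100 results per second, far more than anyone can read. A minimal sketch of one common approach, assuming a simple time-based throttle (the `Throttle` class and the 500 ms interval are illustrative choices, not taken from the app): predictions keep arriving for every frame, but only one per interval is passed on to the UI. The sketch is in Python for brevity; in the iOS app the same logic would live in Swift.

```python
import time
from typing import Optional


class Throttle:
    """Pass an update through at most once per `interval`.

    `interval` and the `now` values must use the same unit
    (seconds by default, because `time.monotonic()` returns seconds).
    """

    def __init__(self, interval: float) -> None:
        self.interval = interval
        self._last_emit: Optional[float] = None

    def should_emit(self, now: Optional[float] = None) -> bool:
        # Use the monotonic clock unless explicit timestamps are passed in.
        if now is None:
            now = time.monotonic()
        if self._last_emit is None or now - self._last_emit >= self.interval:
            self._last_emit = now
            return True
        return False


# Simulate one second of video analysed every 10 ms (100 predictions),
# with the UI refreshing at most every 500 ms. Timestamps are in milliseconds.
throttle = Throttle(interval=500)
shown = [t for t in range(0, 1000, 10) if throttle.should_emit(now=t)]
print(shown)  # [0, 500]
```

Out of 100 predictions only two reach the screen, which keeps the numbers readable while the model still runs on every frame.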

So we started the next evaluation. As it turned out, plenty of such services already exist: big providers like Amazon, Google and Microsoft as well as many smaller providers offer such APIs. One of them stood out in test reports: Kairos. Its big advantage over Amazon or Google is that you can license the Kairos SDK and host it on your own servers. Our customers value this a lot, especially with regard to data protection, and face recognition in particular is a very sensitive issue. Despite all the good reviews, however, we soon realized that the quality of Kairos could not convince us. Neither age nor gender was recognized well, and we never managed to get consistent values for emotions. Sometimes the API worked, sometimes it didn’t. Disappointed, we stopped there and gave up relying on reviews found through Google.

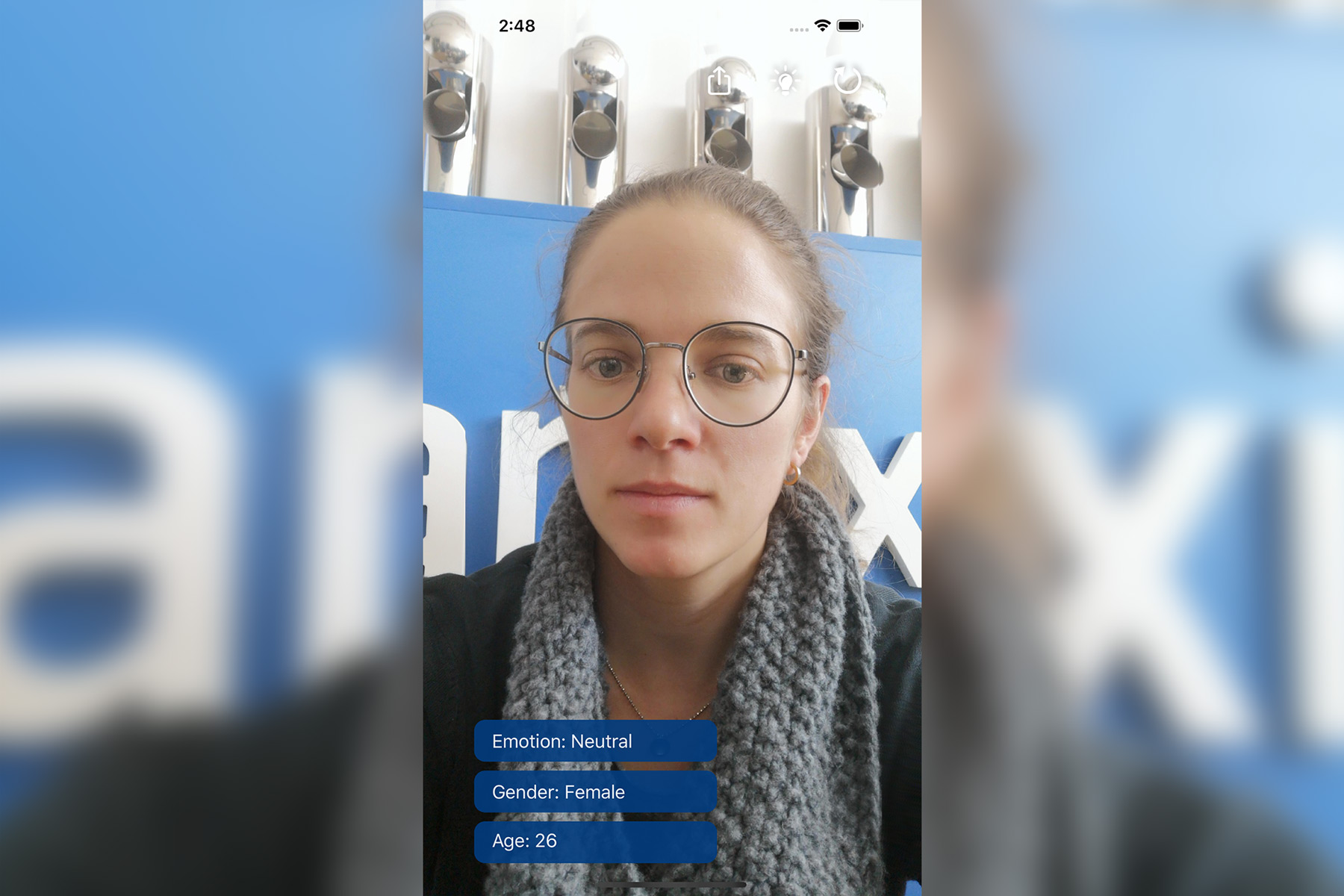

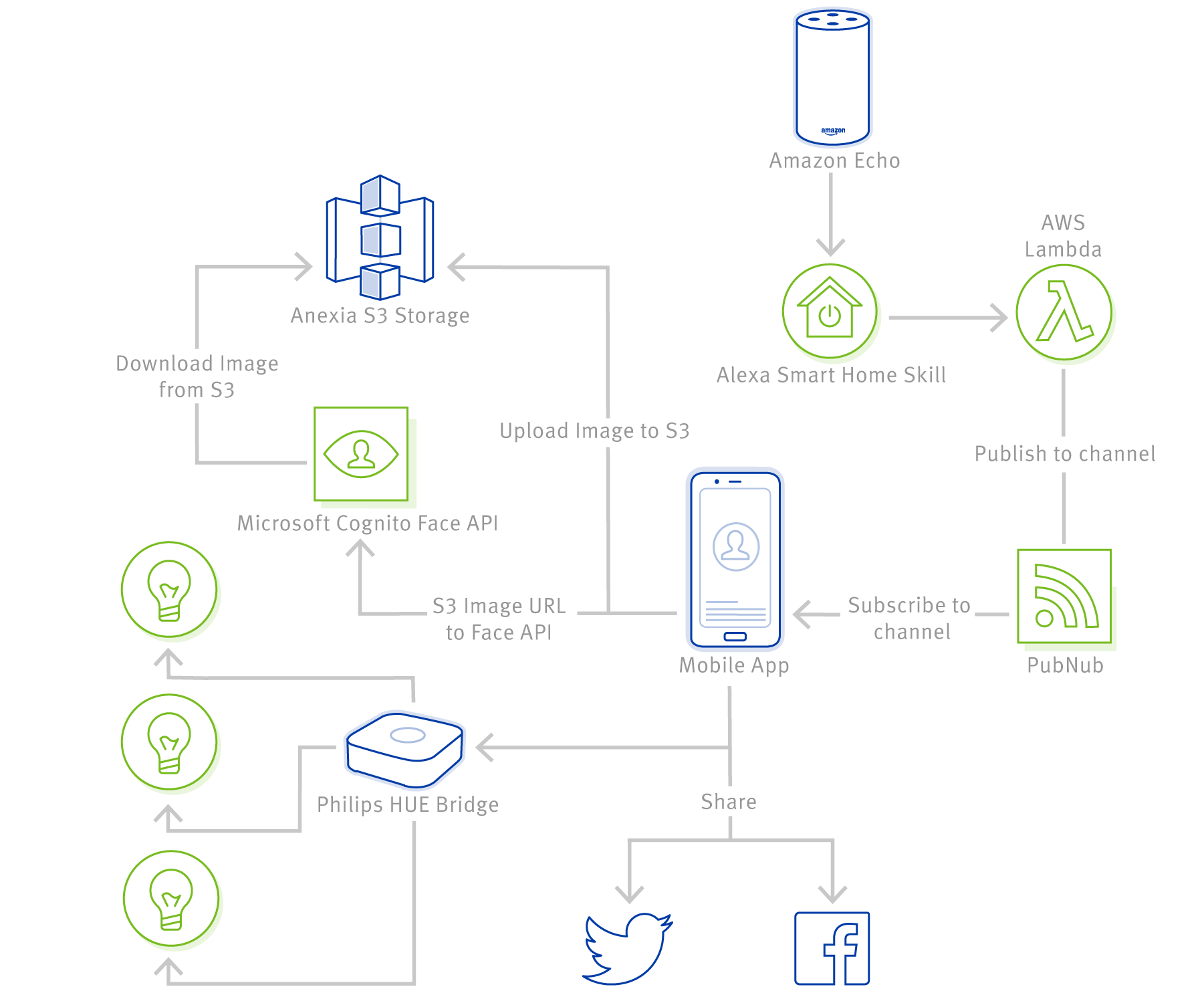

Our second API attempt turned out to be the solution: the Microsoft Face API. We were amazed! Not only did the Microsoft service recognize almost every face, the returned values were also remarkably accurate. In most cases the age was correct to within a range of 5 years, and the gender was right almost every time. In emotion recognition, too, the API was far ahead of the competition. Microsoft offers the Face API as part of its Azure Cognitive Services, and since free developer accounts are available, we integrated it into the app right away. Integrating the service was also much easier than with the competitors: basically, a single API call with an image URL is enough. Just perfect for us! Since Anexia is a hosting provider and supports any kind of storage, we uploaded the photo users take of themselves in the app to our own S3 storage. Thanks to its full compatibility with Amazon S3, this was also really simple.
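As a rough illustration of “an API call with an image URL”, the sketch below builds a Face API `detect` request with Python’s standard library and picks the strongest emotion from one detected face. It assumes the v1.0 REST endpoint with `returnFaceAttributes=age,gender,emotion` and the `Ocp-Apim-Subscription-Key` header; the endpoint, key and image URL are placeholders, and the helper names are hypothetical. Note that Microsoft has since withdrawn the age, gender and emotion attributes from general use under its Responsible AI policy, so current accounts may not be able to request them.

```python
import json
import urllib.request
from typing import Any, Dict


def build_detect_request(endpoint: str, key: str, image_url: str) -> urllib.request.Request:
    """Build (without sending) a Face API v1.0 detect request for an image URL."""
    url = endpoint.rstrip("/") + "/face/v1.0/detect?returnFaceAttributes=age,gender,emotion"
    body = json.dumps({"url": image_url}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Ocp-Apim-Subscription-Key": key,  # Azure subscription key
        },
    )


def dominant_emotion(face: Dict[str, Any]) -> str:
    """Return the emotion with the highest confidence for one detected face."""
    scores = face["faceAttributes"]["emotion"]
    return max(scores, key=scores.get)


# Placeholder values; actually sending the request needs a real resource and key,
# e.g. urllib.request.urlopen(req), which returns a JSON list with one entry per face.
req = build_detect_request(
    "https://example.cognitiveservices.azure.com",
    "YOUR-KEY",
    "https://storage.example.com/selfies/photo.jpg",
)
print(req.get_method(), req.full_url)
```

Because the image is passed by URL, the app only has to upload the photo to storage first and hand the resulting link to the API; no image bytes travel through the request body.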

With that, the first task was complete: our app supported face recognition and correctly displayed age, gender and emotion in the vast majority of cases.

Smart Home: Philips Hue

The next task was to integrate Philips Hue support into the app. If the app was connected to a Philips Hue installation, each detected emotion should produce a different light color: when the app detects joy, connected Philips Hue lamps should switch to a bright orange, anger should turn them bright red, and so on. An easy task, one might think. But here, too, the devil is in the details. As it turned out, the iOS SDK provided by Philips was not up to date and put some obstacles in our way when we integrated it into our app. We had to use several workarounds, but in the end we succeeded and could finally make the connected Hue lamps shine the way we wanted. With that solved, we moved on to the next challenge: the Alexa integration.
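The app itself went through Philips’ iOS SDK. To show the underlying idea independently of that SDK, here is a sketch that maps a detected emotion to a light state and builds a request for the Hue bridge’s local REST API (`PUT /api/<username>/lights/<id>/state`, with `hue` in 0 to 65535 and `sat`/`bri` up to 254). This REST route is a swapped-in technique, not what the app uses. Only orange for joy (the Face API calls it `happiness`) and red for anger come from the article; the blue for sadness, the white fallback, and the bridge address, username and light ID are assumptions.

```python
import json
import urllib.request
from typing import Dict, Union

# Hue angle (degrees on the colour wheel) per Face API emotion name.
# Orange for happiness and red for anger follow the article; blue is hypothetical.
EMOTION_HUES: Dict[str, float] = {
    "happiness": 30.0,  # orange
    "anger": 0.0,       # red
    "sadness": 240.0,   # blue (hypothetical)
}


def hue_value(degrees: float) -> int:
    """Convert a colour-wheel angle to the Hue API's 0-65535 range."""
    return round((degrees % 360) / 360 * 65535)


def light_state_for(emotion: str) -> Dict[str, Union[bool, int]]:
    """Return a light state body: bright saturated colour, or plain white."""
    degrees = EMOTION_HUES.get(emotion)
    if degrees is None:
        # Unmapped emotions (e.g. neutral): white light with zero saturation.
        return {"on": True, "sat": 0, "bri": 254}
    return {"on": True, "hue": hue_value(degrees), "sat": 254, "bri": 254}


def build_light_request(bridge_ip: str, username: str, light_id: int, emotion: str) -> urllib.request.Request:
    """Build (without sending) the PUT request that sets one lamp's state."""
    url = f"http://{bridge_ip}/api/{username}/lights/{light_id}/state"
    body = json.dumps(light_state_for(emotion)).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="PUT", headers={"Content-Type": "application/json"}
    )


req = build_light_request("192.168.0.10", "hypothetical-user", 1, "happiness")
print(req.get_method(), req.full_url, light_state_for("happiness"))
```

Keeping the emotion-to-colour table separate from the transport makes it easy to change the palette without touching the SDK or network code.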

Amazon Alexa: Skill, AWS Lambda and App Connection

Nobody really had a great idea of how to integrate Alexa into the app, but we wanted to work with it anyway. The point is to experiment and get to know as many technologies as possible. As so often, the most important question is: What is the use case? For now, we decided to take the easy route: Alexa takes the picture in the app, acting as a kind of voice-controlled self-timer: “Alexa, start face recognition and take a photo”. No sooner said than done…

The first step was to create an Alexa skill. Since Alexa skills are created with a regular Amazon developer account, no Amazon Web Services account is needed for this step. Creating the skill itself is easy: Amazon documents everything clearly and guides you through the process with video tutorials and plenty of understandable documentation. You teach Alexa which words she should react to and what should happen once she has recognized them correctly. Basically, there are only two options:

- Alexa calls up a web service that you create yourself

- Alexa calls an AWS Lambda function

Amazon recommends using AWS Lambda. If you take a look at the documentation for building your own web service, you will quickly understand why: we estimated it would have taken weeks to create such a service, because Amazon’s security requirements are very high (which is a good thing). The AWS Lambda route, by contrast, is just as easy as creating the skill: in less than 30 minutes, Alexa was calling our AWS Lambda function correctly. AWS Lambda is Amazon’s Function-as-a-Service (FaaS) offering. Instead of building a complete web service yourself, you implement a single function, and AWS provides all the resources it needs whenever the function is called. The key is the pay-per-use pricing model: for an app like our experiment, this can often be much cheaper than keeping your own servers running, and the implementation effort is greatly reduced.
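On the Lambda route, the function receives Alexa’s request as JSON and returns a JSON response that Alexa reads out. A minimal Python sketch of such a handler, assuming a custom intent named `TakePhotoIntent` (hypothetical; the skill’s real intent names are not given here) and a `publish` callable standing in for whatever forwards the command to the app. Only the `lambda_handler(event, context)` entry point and the response envelope follow the standard Lambda and Alexa conventions; everything else is illustrative.

```python
from typing import Any, Callable, Dict

Publish = Callable[[Dict[str, Any]], None]


def speak(text: str, end_session: bool = True) -> Dict[str, Any]:
    """Minimal Alexa response containing plain-text speech."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def handle(event: Dict[str, Any], publish: Publish) -> Dict[str, Any]:
    """Route an Alexa request; 'TakePhotoIntent' is a hypothetical intent name."""
    request = event["request"]
    request_type = request["type"]
    if request_type == "LaunchRequest":
        return speak("Say: take a photo.", end_session=False)
    if request_type == "IntentRequest" and request["intent"]["name"] == "TakePhotoIntent":
        publish({"command": "take_photo"})  # forward the command to the app
        return speak("Say cheese!")
    if request_type == "SessionEndedRequest":
        return {"version": "1.0", "response": {}}  # Alexa speaks nothing here
    return speak("Sorry, I did not understand that.")


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """AWS Lambda entry point; printing stands in for the real messaging call."""
    return handle(event, publish=lambda message: print("would publish:", message))


# Local usage: collect published commands in a list instead of sending them.
sent: list = []
reply = handle(
    {"request": {"type": "IntentRequest", "intent": {"name": "TakePhotoIntent"}}},
    publish=sent.append,
)
```

Injecting `publish` keeps the Alexa routing testable without any messaging service attached.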

The next challenge was to connect the Lambda function to the app so that it could trigger the photo. Our first idea was to use push notifications. But if you take a closer look at Apple’s documentation, you soon see that push notifications are not necessarily the best way to do this. By default, they are first displayed to the user, who then has to tap them to open the app. We wanted to avoid this, so that the user is not forced to interact with the phone: Alexa should trigger the photo while the app is already open. There are so-called silent push notifications, which are delivered directly to the app without the user noticing, but Apple recommends sending them only rarely, no more than about three per hour. If you exceed that, Apple’s push service may simply not deliver a notification. That rate was far too low for us, because the app had to react every time Alexa “commands” it to. So we needed another way: simple, reliable and fast.
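To make the frequency problem concrete, the sketch below models the guideline as a sliding-window budget of three silent pushes per hour and shows the shape of a silent push payload (`"content-available": 1` inside the `aps` dictionary). The `HourlyBudget` class and the custom `command` key are illustrative assumptions: APNs exposes no such counter, it simply may not deliver excess notifications.

```python
from collections import deque
from typing import Deque

# Silent push: no alert, just "content-available": 1; "command" is a hypothetical custom key.
SILENT_PUSH_PAYLOAD = {"aps": {"content-available": 1}, "command": "take_photo"}


class HourlyBudget:
    """Sliding-window check: at most `limit` sends within any `window` seconds."""

    def __init__(self, limit: int = 3, window: float = 3600.0) -> None:
        self.limit = limit
        self.window = window
        self._sent: Deque[float] = deque()

    def try_send(self, now: float) -> bool:
        # Forget sends that have left the window.
        while self._sent and now - self._sent[0] >= self.window:
            self._sent.popleft()
        if len(self._sent) < self.limit:
            self._sent.append(now)
            return True
        return False


budget = HourlyBudget()
commands_at = [0, 60, 120, 180, 240]  # five "take a photo" commands within four minutes
print([budget.try_send(t) for t in commands_at])  # [True, True, True, False, False]
```

Already the fourth voice command in a short session exceeds the budget, which is why silent pushes did not fit a feature meant to react every time.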

The solution was PubNub, a service for real-time messaging between devices. You can send data from server to server, from client to server or the other way round; communication runs in all directions and in real time. The integration was incredibly simple: there are libraries for almost every platform, including, of course, iOS and Android. For the AWS Lambda function we chose a Python implementation, because PubNub also offers a Python SDK. Within a very short time the connection was established, and we could actually tell Alexa to take a photo in our app.
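The pattern PubNub provides is publish/subscribe on named channels: the app subscribes to a channel, and the Lambda function publishes a message to it. The toy in-memory broker below illustrates only that pattern; it is not the PubNub SDK, and the channel name `showcase-app` and the message format are hypothetical.

```python
from typing import Any, Callable, DefaultDict, Dict, List
from collections import defaultdict

Message = Dict[str, Any]
Listener = Callable[[Message], None]


class InMemoryBroker:
    """Toy publish/subscribe broker illustrating channel-based messaging."""

    def __init__(self) -> None:
        self._listeners: DefaultDict[str, List[Listener]] = defaultdict(list)

    def subscribe(self, channel: str, listener: Listener) -> None:
        """Register a callback for every message published on `channel`."""
        self._listeners[channel].append(listener)

    def publish(self, channel: str, message: Message) -> int:
        """Deliver `message` to all current subscribers; return how many got it."""
        listeners = self._listeners.get(channel, [])
        for listener in listeners:
            listener(message)
        return len(listeners)


# App side: subscribe and react to commands.
photos_taken: List[str] = []


def on_message(message: Message) -> None:
    if message.get("command") == "take_photo":
        photos_taken.append("photo")  # placeholder for triggering the camera


broker = InMemoryBroker()
broker.subscribe("showcase-app", on_message)

# Lambda side: publish the command once Alexa has recognised the intent.
delivered = broker.publish("showcase-app", {"command": "take_photo"})
print(delivered, photos_taken)  # 1 ['photo']
```

Unlike a push notification, the message goes straight to the running app’s callback, so nothing is shown to the user and there is no hourly limit in the pattern itself.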

Our app is already quite extensive. But one question remains: If the app is open on several iPhones at the same time, how do we tell Alexa which device should take the photo? We haven’t found a solution so far. Should we define specific words in the Alexa skill that apply only to a particular device? But what if the app is running on 20, 50 or 100 devices at the same time?

We will tackle this conceptual problem in the near future. We are not running out of ideas!

Conclusion

The current interim result is our so-called “Anexia Showcase App”. It can

- take a picture on command via Alexa,

- evaluate this photo using AI,

- share the results on social media,

- and change the light in the room depending on the detected mood.

And the conclusion? There is great potential in linking the various functions and services with one another. We were also surprised at how much a very simple Smart Home application can amuse our colleagues; there is a lot of potential there, too. But the most important thing is to always keep your goal in mind: What do I actually want to achieve with a Smart Home app? What added value should the app offer?

Of course, our app project was a playground; the goal was simply to try things out. We encountered various problems and learned a lot about SDKs, providers and how they handle support. The journey was incredibly fun for all of us, and we are very proud of the result!

The source code for the app is available on GitHub:

https://github.com/anexia-it/anexia-showcase-app-ios

We are happy to pass on what we have learned to our customers. If you are looking for a competent development partner for iOS development, mobile development in general, Alexa Skill development or Smart Home applications, you have come to the right place. Our project management team will be happy to advise you.